Setting up a Kubernetes cluster the hard way is DONE.

This process was very educational. First, I deepened my understanding of how Kubernetes works under the hood, and that alone was worth taking the plunge.

I’d rate my Kubernetes setup as a success with a few compromises. For one, I was using KodeKloud’s GitHub repo as a soft guide, but it’s pretty dated.

The guide uses v1.13.0 of Kubernetes to bootstrap the cluster, which is well behind the current release, 1.25.0.

I intended to set up 1.25 but ended up with v1.23.0, so that’s one compromise. There are many changes between 1.23 and 1.25. The biggest one is that a lot of flags are deprecated and some commands are removed in 1.25.

I stuck with 1.23 because it still fulfilled the purpose of learning how to bootstrap a cluster without the kubeadm tool. I’ll look into bootstrapping version 1.25.0 at a later date.

I feel like I have a better understanding of how all of the Kubernetes pieces work together from this project, especially since I hit challenges and roadblocks while getting up and running.

Challenges:

TLS Certificates

Creating Ansible roles and templates

TLS certificates are fun. (sike!)

PKI is pretty straightforward to set up. I get the concept of private and public keys, and we use them often, but generating these certs for Kubernetes was a bit much. I see why automated solutions were created to do this for you. It’s very time consuming, and scaling it manually is a lot.

My first mistake in setting up TLS was that I wanted to use FQDNs in my cert config files. Kubernetes was not happy at all. That meant I had to go regenerate the certificates to use IP addresses instead of FQDNs.

Eventually the manually generated certs worked as intended, but another hiccup came when automating the certificates through TLS bootstrapping.

Setting up Kubernetes to bootstrap the certs should be easy? Wrong. Things have changed since v1.13.

The breaking point was the certificate signing request (CSR) approval process. Following the instructions in the guide, the CSR never appeared because the Kubernetes process had changed.
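One plausible reason for this, assuming the components were reaching each other by IP address: x509 verification checks an IP against the certificate’s IP SAN entries and a hostname against its DNS SAN entries, and the two are not interchangeable. Here is a minimal Python sketch of that rule. The function name, hostname, and address are made up for illustration, and it ignores wildcards, so treat it as a simplification rather than how Kubernetes actually implements the check.

```python
import ipaddress


def san_matches(target, dns_sans, ip_sans):
    """Simplified SAN check: IPs match only IP SANs, names only DNS SANs.

    Hypothetical helper for illustration; no wildcard handling.
    """
    try:
        target_ip = ipaddress.ip_address(target)
    except ValueError:
        target_ip = None  # not an IP literal, so treat it as a hostname

    if target_ip is not None:
        # An IP target is compared against IP SAN entries only.
        return any(ipaddress.ip_address(s) == target_ip for s in ip_sans)

    # A hostname target is compared (case-insensitively) against DNS SANs only.
    return target.lower() in (d.lower() for d in dns_sans)


# Cert issued with only an FQDN, but the client connects by IP: no match.
print(san_matches("192.168.5.11", ["worker-1.example.internal"], []))
# Cert reissued with the IP as a SAN: match.
print(san_matches("192.168.5.11", [], ["192.168.5.11"]))
```

So a cert that only lists an FQDN will fail for anything that dials the node by IP, which lines up with what I saw.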

I spent a few hours looking at the journalctl logs trying to find where I maybe missed a step.

I looked at everything from kube-apiserver.service, kubelet.service, kube-controller-manager.service, kube-proxy.service, and a few others.

What threw me off was that the node was showing up when I ran kubectl get no. I could see it and create pods, deployments, and services.

Everything was working, except logs and exec. So I figured it was a missed step on my part. Maybe a typo somewhere.

The error message was an x509 certificate error.

After a few hours, some breaks, and plenty of frustration, I concluded I hadn’t missed a step. Everything on my end looked good, so that brought me to check the GitHub issues.

I found what I needed.

The magic was in adding --rotate-server-certificates=true to the kubelet unit file, then reloading the systemd daemon and restarting the kubelet service.

The CSR showed up to the party and I approved the worker node’s request.

Problem solved. I could view logs and exec into the containers.
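As far as I understand it, kubelet serving-certificate CSRs are not auto-approved by default, which is why someone has to approve them by hand. Here is a rough Python sketch of picking out the CSRs that are still waiting. It assumes you feed it the output of kubectl get csr -o json. The function name and the sample CSR names are hypothetical, and the sample is trimmed down to just the fields the sketch reads.

```python
import json

# Signer name used for kubelet serving certificates.
KUBELET_SERVING_SIGNER = "kubernetes.io/kubelet-serving"


def pending_kubelet_serving_csrs(csr_list_json):
    """Return names of kubelet-serving CSRs that are neither approved nor denied."""
    data = json.loads(csr_list_json)
    pending = []
    for item in data.get("items", []):
        spec = item.get("spec", {})
        conditions = item.get("status", {}).get("conditions") or []
        # A CSR with no Approved/Denied condition is still pending.
        decided = any(c.get("type") in ("Approved", "Denied") for c in conditions)
        if spec.get("signerName") == KUBELET_SERVING_SIGNER and not decided:
            pending.append(item["metadata"]["name"])
    return pending


# Hypothetical, trimmed-down stand-in for `kubectl get csr -o json` output.
sample = json.dumps({
    "items": [
        {
            "metadata": {"name": "csr-aaaaa"},
            "spec": {"signerName": KUBELET_SERVING_SIGNER},
            "status": {},
        },
        {
            "metadata": {"name": "csr-bbbbb"},
            "spec": {"signerName": KUBELET_SERVING_SIGNER},
            "status": {"conditions": [{"type": "Approved"}]},
        },
    ]
})

print(pending_kubelet_serving_csrs(sample))  # ['csr-aaaaa']
```

Each name it returns can then be approved with kubectl certificate approve followed by the CSR name.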

Ansible

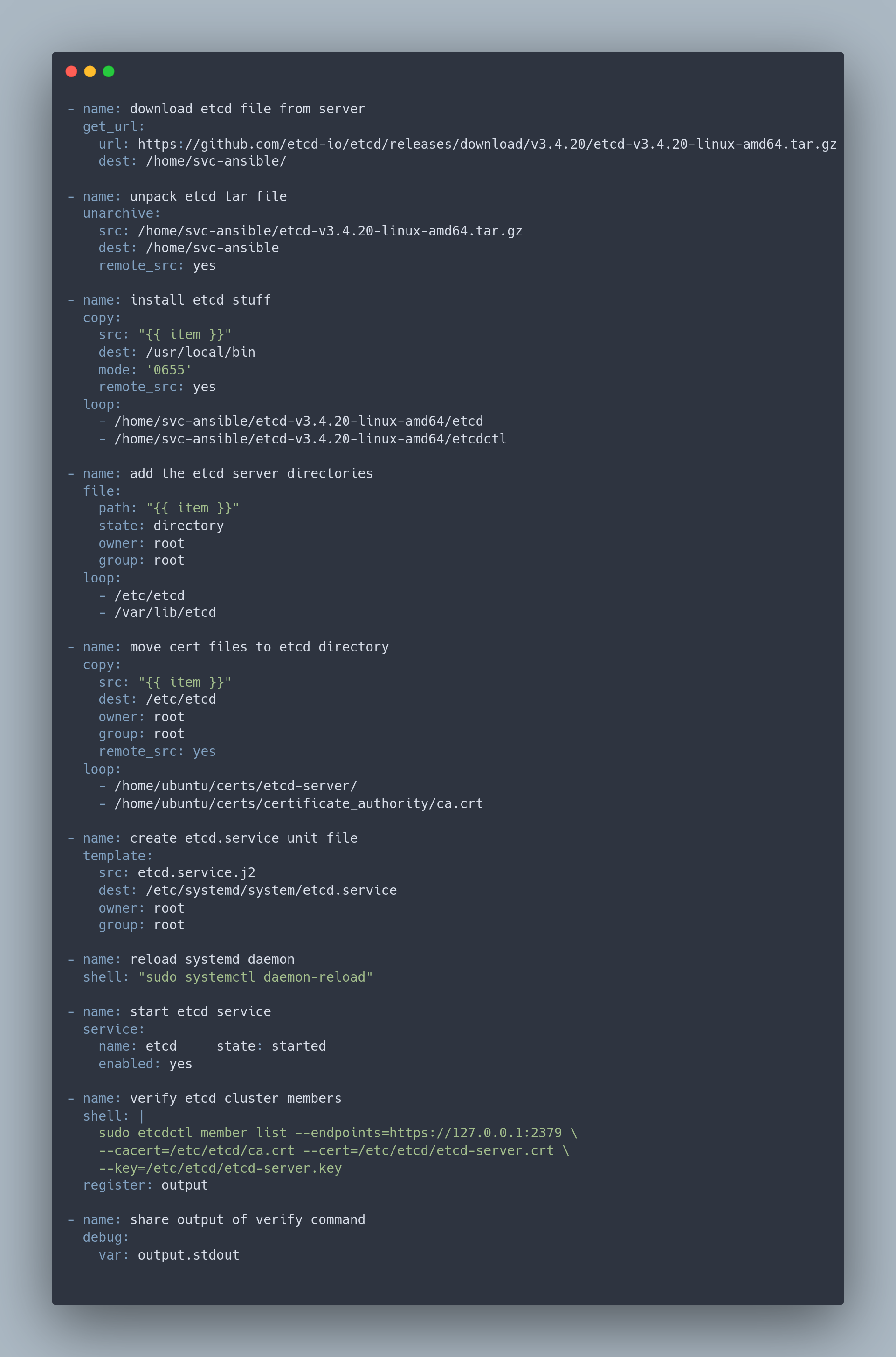

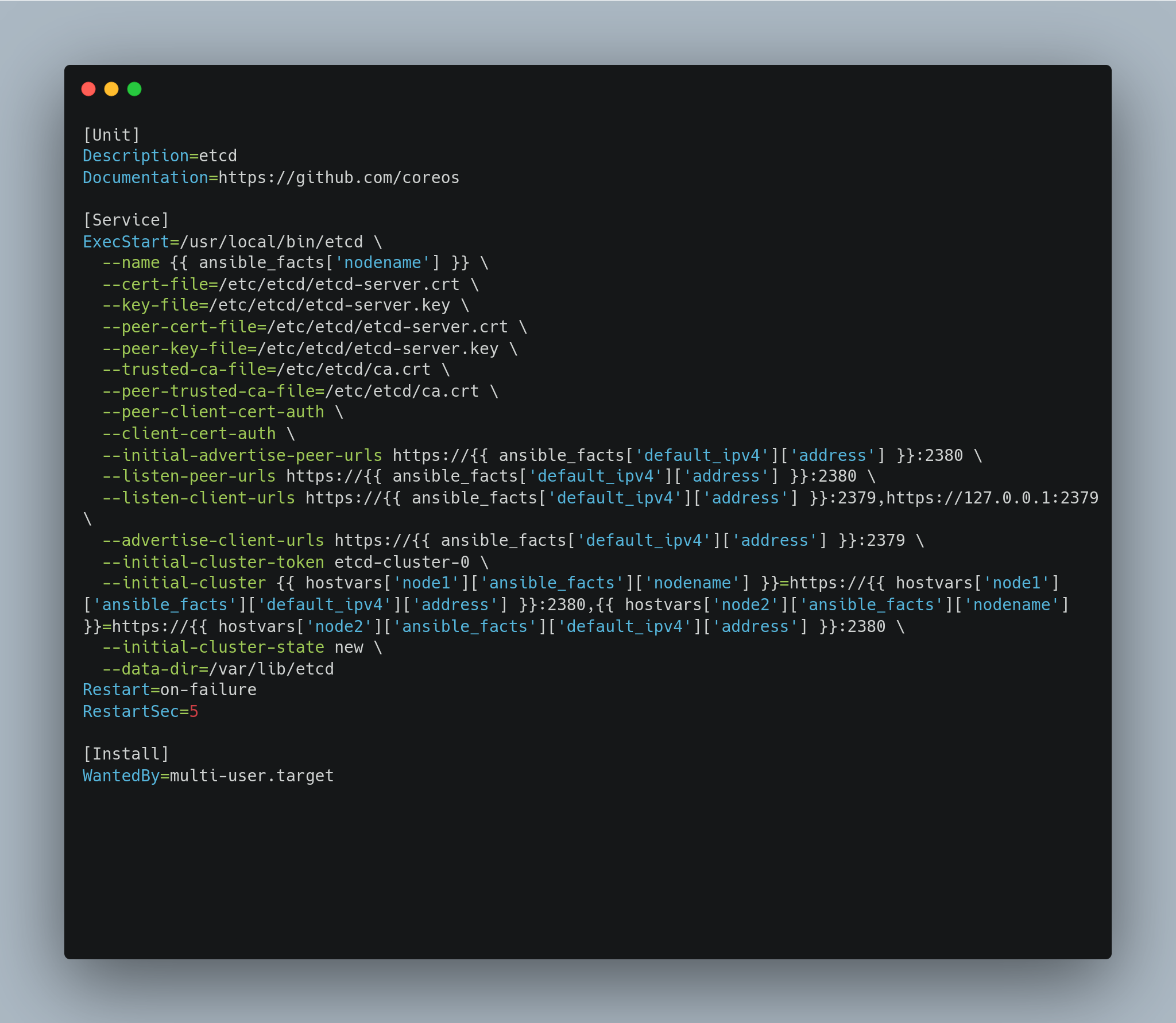

Creating Ansible playbooks was a challenge similar to above. Writing roles and playbooks while also updating outdated information is a challenge. Lots of research and googling.

I wrote roles for etcd, docker, control-plane (bootstrapping kube-api, kube-controller-manager, kube-scheduler).

I utilized snapshotting on xcp-ng. If the playbook was broken, I’d revert back to the healthy snapshot and make changes to correct the bugs until I had a working playbook.

This was a challenge of time utilization.

Was it necessary to spend time writing these roles? I will likely be using kubeadm to set up clusters in the future.

I’d say yes and no to that question.

I know there are existing roles for this stuff, but for the knowledge gain it was worth writing them myself. Writing them further cemented how these Kubernetes components work.

Smoke Tests

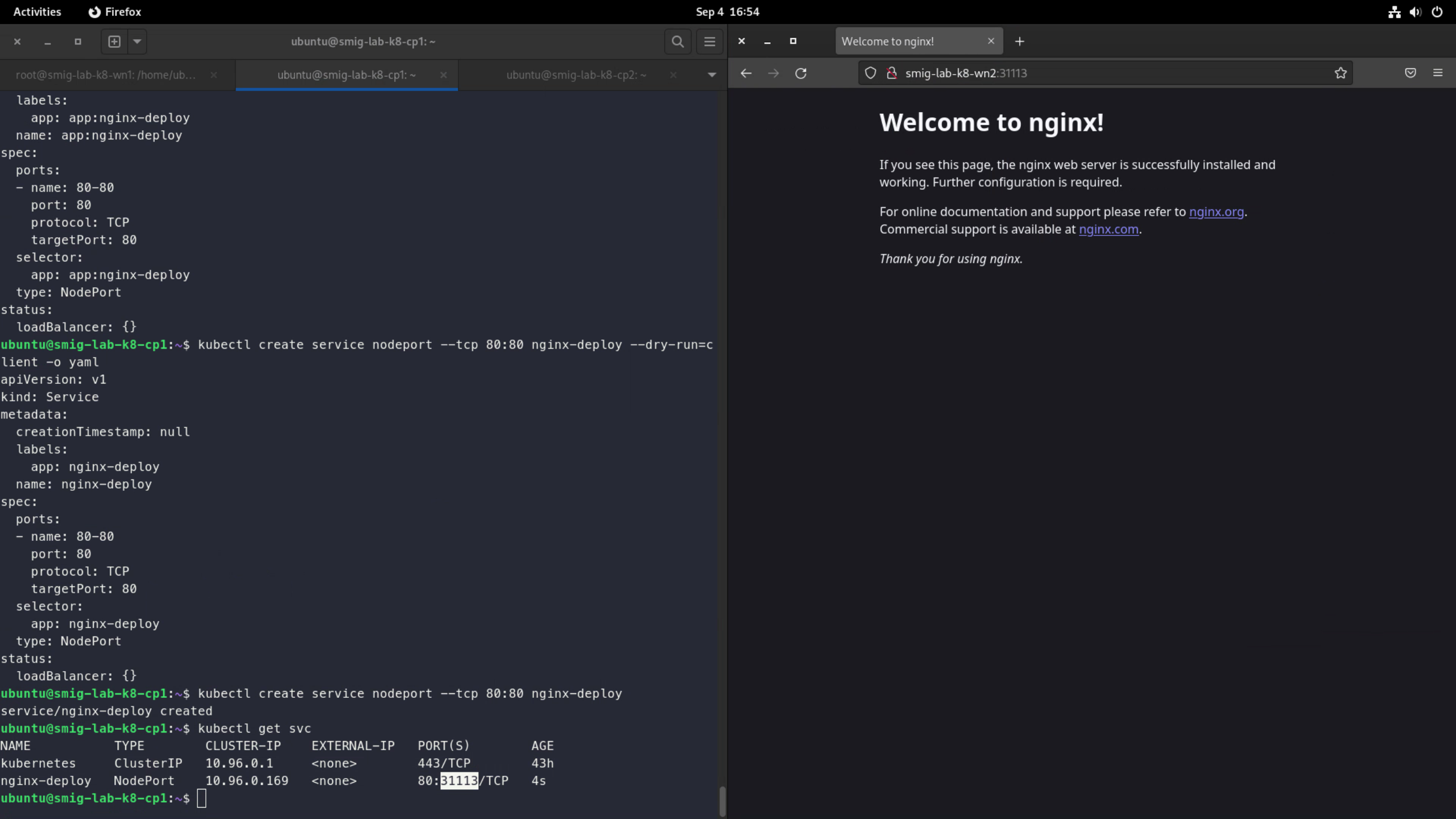

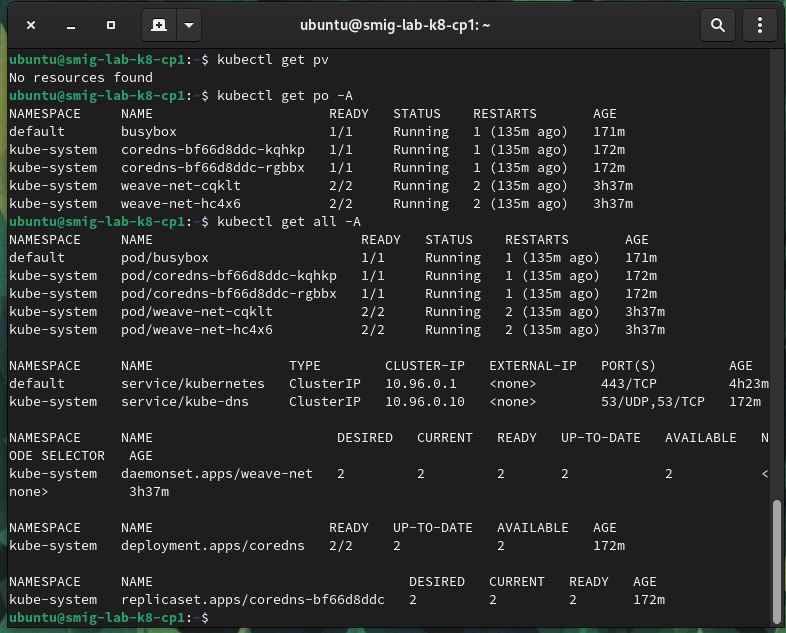

It was time to put my bootstrapped cluster to the test. I did so by performing smoke tests. I tested encryption, deployments, services, logs, exec, and an e2e test.

Testing NodePort:

Testing kube-system pods:

My cluster showed out. I passed most of the tests. BUT, I would not use my cluster in production, just because it’s not the ideal setup for prod.

The biggest takeaway from this experience is that I feel more prepared to take the CKA exam than before. I have a better understanding of Kubernetes under the hood. I recommend bootstrapping a cluster the hard way to better understand Kubernetes.