GitOps with ArgoCD: Building Cloud Infrastructure on Linode and OCI

A couple of months ago, I shared a dynamic DNS script I wrote in Python. At the time, I was preparing to self-host services from home. Since then, I’ve switched gears and decided to host some of those services in the cloud instead.

Why Choose Cloud Hosting for GitOps

Building projects with Terraform, GitOps, and Jenkins CI/CD in the cloud is great hands-on experience, and working through the problems that come up along the way is a learning experience of its own.

Implementation Overview

Step 1: Cloud Platform Selection and Infrastructure Planning

The sheer number of cloud providers and price points to choose from is a bit daunting. For my needs, I decided on Oracle Cloud Free Tier and Linode.

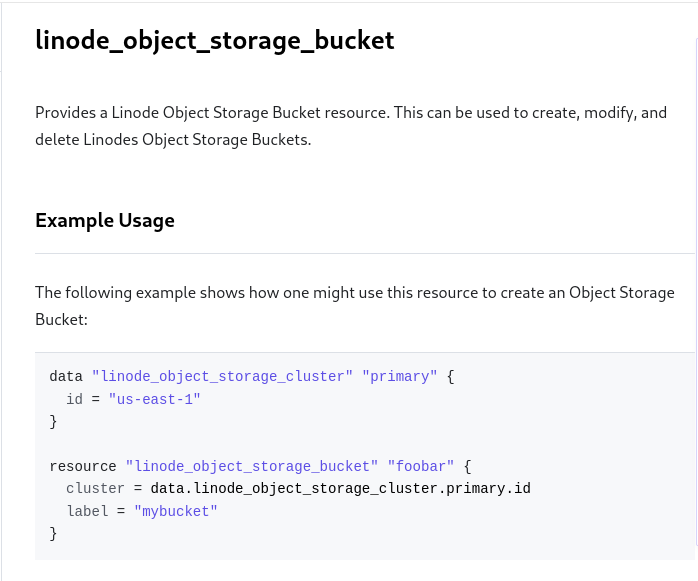

Terraform is a natural fit for provisioning resources like the VCN (Virtual Cloud Network), the S3 bucket, and the two virtual machine instances.

Between the Terraform docs and some familiarity with HCL (HashiCorp Configuration Language), it’s not too difficult to get resources up and running with the OCI and Linode Terraform providers.
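For reference, both providers are published on the Terraform Registry as `oracle/oci` and `linode/linode`. Here’s a minimal provider setup as a sketch, assuming API-key authentication for OCI and a Linode personal access token; the variable names are hypothetical placeholders.

```hcl
# providers.tf - minimal sketch; variable names are hypothetical placeholders
terraform {
  required_providers {
    oci = {
      source = "oracle/oci"
    }
    linode = {
      source = "linode/linode"
    }
  }
}

# OCI API-key authentication: values come from the API key
# generated for your OCI user in the console.
provider "oci" {
  tenancy_ocid     = var.tenancy_ocid
  user_ocid        = var.user_ocid
  fingerprint      = var.fingerprint
  private_key_path = var.private_key_path
  region           = var.region
}

# Linode authenticates with a personal access token.
provider "linode" {
  token = var.linode_token
}

variable "tenancy_ocid" {}
variable "user_ocid" {}
variable "fingerprint" {}
variable "private_key_path" {}
variable "region" {}

variable "linode_token" {
  sensitive = true # keep the token out of plan output
}
```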

Step 2: Writing Terraform Manifests for Cloud Resources

This step covered deciding on resource needs, writing the Terraform manifests, and checking them into Gitea.
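As an illustration of the kind of manifest that gets checked in at this step, here’s a hypothetical sketch of the network and storage pieces on OCI. The resource names and CIDR ranges are made up, and it assumes the bucket lives in OCI Object Storage (which also offers an S3-compatible API); that placement is an assumption for this sketch.

```hcl
# network.tf - hypothetical sketch; names and CIDR ranges are placeholders
variable "compartment_id" {}

resource "oci_core_vcn" "lab" {
  compartment_id = var.compartment_id
  cidr_blocks    = ["10.0.0.0/16"]
  display_name   = "lab-vcn"
  dns_label      = "lab"
}

# Instances with public IPs need an internet gateway and a default route to it.
resource "oci_core_internet_gateway" "lab" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.lab.id
  display_name   = "lab-igw"
}

resource "oci_core_route_table" "public" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.lab.id
  display_name   = "public-rt"

  route_rules {
    destination       = "0.0.0.0/0"
    destination_type  = "CIDR_BLOCK"
    network_entity_id = oci_core_internet_gateway.lab.id
  }
}

resource "oci_core_subnet" "public" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.lab.id
  cidr_block     = "10.0.1.0/24"
  display_name   = "public-subnet"
  dns_label      = "public"
  route_table_id = oci_core_route_table.public.id
}

# Object Storage buckets are scoped to the tenancy namespace.
data "oci_objectstorage_namespace" "ns" {
  compartment_id = var.compartment_id
}

resource "oci_objectstorage_bucket" "lab" {
  compartment_id = var.compartment_id
  namespace      = data.oci_objectstorage_namespace.ns.namespace
  name           = "lab-bucket"
}
```

Without explicit `security_list_ids`, the subnet falls back to the VCN’s default security list, so inbound rules for anything beyond SSH would still need to be added.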

Virtual Machine Architecture

A Docker host for basic services like WireGuard, Gitea, Authelia, and Vaultwarden:

- 1 vCPU
- 1 GB RAM

A single-node Kubernetes cluster on an OCI Ampere A1 compute instance:

- 4 vCPUs
- 24 GB RAM
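Assuming the Docker host runs on the Linode side (an assumption for this sketch), a 1 vCPU / 1 GB RAM machine corresponds to Linode’s Nanode 1 GB plan, type `g6-nanode-1`. A hypothetical Terraform definition could look like this; the label, region, and image are placeholders.

```hcl
# docker_host.tf - hypothetical sketch; label, region and image are placeholders
variable "ssh_public_key" {}

variable "root_password" {
  sensitive = true
}

resource "linode_instance" "docker_host" {
  label           = "docker-host"
  region          = "us-east"
  type            = "g6-nanode-1" # Nanode 1 GB: 1 vCPU, 1 GB RAM
  image           = "linode/ubuntu22.04"
  authorized_keys = [var.ssh_public_key]
  root_pass       = var.root_password
}

# Public IPv4 address, handy for DNS records or an Ansible inventory.
output "docker_host_ip" {
  value = linode_instance.docker_host.ip_address
}
```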

Below is a snippet of the manifest used to deploy the Kubernetes node. It checks into version control easily and gives clear visibility into how the node is built. A cloud-init script, passed in as user data, finalizes the configuration.

```hcl
# Single node Terraform OCI manifest

# Read the cloud-init file that finishes configuring the node on first boot.
# template_file comes from the archived hashicorp/template provider;
# base64encode(file("./cloud-init.yaml")) is an equivalent that needs no extra provider.
data "template_file" "user_data" {
  template = file("./cloud-init.yaml")
}

resource "oci_core_instance" "master_node" {
  availability_domain = var.availability_domain
  compartment_id      = var.compartment_id
  display_name        = "master_node"
  shape               = local.server_instance_config.shape_id

  # Flexible shapes take an explicit OCPU count and memory size
  shape_config {
    memory_in_gbs = local.server_instance_config.ram
    ocpus         = local.server_instance_config.ocpus
  }

  source_details {
    source_id   = local.server_instance_config.image
    source_type = local.server_instance_config.source_type
  }

  create_vnic_details {
    assign_public_ip       = "true"
    nsg_ids                = []
    private_ip             = local.server_instance_config.master_ip
    skip_source_dest_check = "false"
    subnet_id              = local.server_instance_config.subnet_id
  }

  metadata = {
    "ssh_authorized_keys" = "" # SSH public key (blank in this snippet)
    "user_data"           = base64encode(data.template_file.user_data.rendered)
  }
}
```
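The snippet reads its sizing from `local.server_instance_config`, which isn’t shown. A hypothetical locals block matching the 4 vCPU / 24 GB spec above might look like this; the OCIDs come in as placeholder variables and the private IP is made up.

```hcl
# locals.tf - hypothetical values for the instance snippet
variable "a1_image_ocid" {} # must be an aarch64 (Arm) image, since A1 is Ampere
variable "subnet_ocid" {}

locals {
  server_instance_config = {
    shape_id    = "VM.Standard.A1.Flex" # Ampere A1 flexible shape
    ocpus       = 4
    ram         = 24                    # used as memory_in_gbs
    image       = var.a1_image_ocid
    source_type = "image"
    master_ip   = "10.0.1.10"           # placeholder; must fall inside the subnet CIDR
    subnet_id   = var.subnet_ocid
  }
}
```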

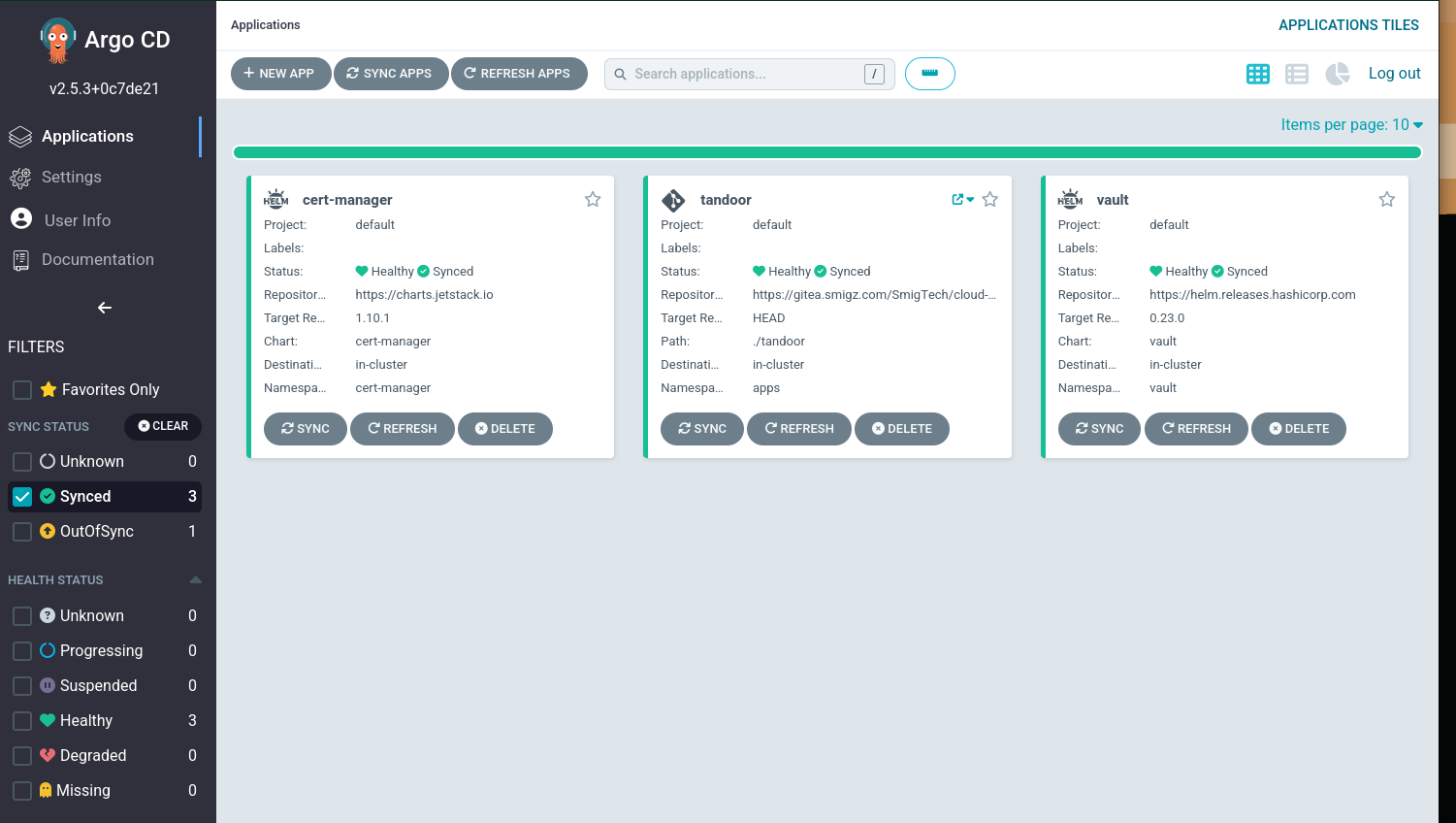

Step 3: Implementing GitOps with ArgoCD

Last year at a work conference, I was introduced to ArgoCD, a tool for implementing GitOps on Kubernetes clusters.

To keep things brief: deploying ArgoCD is as simple as running `kubectl apply -f <argo yaml>`, after which I made adjustments to fit my needs.
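If you’d rather keep the ArgoCD install in Terraform alongside everything else, the project’s Helm chart (`argo-cd` in the `argo-helm` repository) is one option. This is a sketch of that alternative, not the `kubectl apply` route described above; the kubeconfig path is an assumption, and the Helm provider is pinned to 2.x because the nested `kubernetes` block shown here is the 2.x syntax.

```hcl
# argocd.tf - alternative sketch using the Helm provider
terraform {
  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.12" # nested kubernetes block below is 2.x syntax
    }
  }
}

provider "helm" {
  kubernetes {
    config_path = "~/.kube/config" # assumed kubeconfig location
  }
}

resource "helm_release" "argocd" {
  name             = "argocd"
  repository       = "https://argoproj.github.io/argo-helm"
  chart            = "argo-cd"
  namespace        = "argocd"
  create_namespace = true
  # Set `version` to pin a chart release for repeatable installs.
}
```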

Once the resources were ready, I started deploying the GitOps pieces:

- Checked the ArgoCD manifests into a self-hosted Gitea repo.
- Deployed cert-manager for automated TLS certificate provisioning (ArgoCD/Helm).
- Deployed HashiCorp Vault for secret management (ArgoCD/Helm).
- Created application and Helm chart repos in Gitea.
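In my setup the ArgoCD manifests live in Gitea. To keep this post’s examples in one language, here is a hypothetical equivalent for the two Helm-based deployments, expressed through the Terraform kubernetes provider’s `kubernetes_manifest` resource. The chart repositories are the public Jetstack and HashiCorp Helm repos; chart versions are left to a variable, and ArgoCD’s CRDs must already be installed when Terraform plans, because `kubernetes_manifest` needs the resource schema from the cluster at plan time.

```hcl
# argocd_apps.tf - hypothetical sketch of ArgoCD Applications for Helm charts
provider "kubernetes" {
  config_path = "~/.kube/config" # assumed kubeconfig location
}

# Chart versions to pin, keyed by chart name
variable "chart_versions" {
  type = map(string)
}

locals {
  helm_apps = {
    "cert-manager" = {
      repo      = "https://charts.jetstack.io"
      namespace = "cert-manager"
      # Install CRDs with the chart; older chart versions use installCRDs instead
      values = yamlencode({ crds = { enabled = true } })
    }
    "vault" = {
      repo      = "https://helm.releases.hashicorp.com"
      namespace = "vault"
      values    = yamlencode({})
    }
  }
}

# One ArgoCD Application per chart; ArgoCD renders and syncs the Helm release.
resource "kubernetes_manifest" "helm_app" {
  for_each = local.helm_apps

  manifest = {
    apiVersion = "argoproj.io/v1alpha1"
    kind       = "Application"
    metadata = {
      name      = each.key
      namespace = "argocd"
    }
    spec = {
      project = "default"
      source = {
        repoURL        = each.value.repo
        chart          = each.key # chart name matches the map key
        targetRevision = var.chart_versions[each.key]
        helm = {
          values = each.value.values
        }
      }
      destination = {
        server    = "https://kubernetes.default.svc"
        namespace = each.value.namespace
      }
      syncPolicy = {
        automated   = { prune = true, selfHeal = true }
        syncOptions = ["CreateNamespace=true"]
      }
    }
  }
}
```

With this split, Terraform owns only the Application objects; ArgoCD handles installing, upgrading, and self-healing the charts themselves.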

Conclusion and Next Steps

I hope this post inspires you to get started with GitOps in your labs.

If you’re new to Kubernetes, I recommend starting with my Complete Kubernetes Learning Path to build a solid foundation before diving into GitOps.

For infrastructure automation that complements GitOps workflows, check out my …

I’d love to connect if you have any feedback. Hit the social icons on the homepage. :-)

See you on the next post!