I’m starting this Cloud Resume API Challenge with two days left in the challenge window, on a cloud platform (Google Cloud) I’ve never used before (well, very little).

What could go wrong? lol

I will be attempting to get this done in two days. I’m starting on Monday, July 29th, at 7:15 p.m., and I’m writing this blog post in real time.

I’ve set up a public repo on GitHub for this challenge.

Step one: Setting up Terraform

I need to authenticate and authorize against the Google Cloud API before I can begin deploying resources.

That means I’ll be going to the documentation:

https://cloud.google.com/sdk/gcloud/reference/auth/application-default

https://registry.terraform.io/providers/hashicorp/google/latest/docs

I’ve begun setting up the service accounts that will provision the Terraform resources. This is a chicken-and-egg situation: a brand-new cloud account has no landing zone, so the deployment accounts will most likely need to be created manually, through either the CLI or the API.

I’ll create the service account with Terraform while authenticated as the root account in the gcloud CLI. Here’s a snippet of the Terraform manifest:

```hcl
# Service account used to provision the resume resources
resource "google_service_account" "sa" {
  account_id   = var.service_account_id
  display_name = var.service_account_display_name
}

# Binding on the service account as a *resource*: controls who can use or manage it
resource "google_service_account_iam_binding" "admin-account-iam" {
  service_account_id = google_service_account.sa.name
  role               = google_project_iam_custom_role.api_role.name

  members = [
    "serviceAccount:${google_service_account.sa.email}",
  ]

  depends_on = [google_cloudfunctions_function.function, google_cloudfunctions_function_iam_member.invoker]

  lifecycle {
    replace_triggered_by = [google_cloudfunctions_function_iam_member.invoker]
  }
}

# Binding on the project: grants the service account (as an *identity*) the custom role
resource "google_project_iam_binding" "service-account" {
  project = var.project_name
  role    = google_project_iam_custom_role.api_role.name

  members = [
    "serviceAccount:${google_service_account.sa.email}",
  ]
}

# Link to the full file here:
# https://github.com/smiggiddy/cloud-resume-api-challenge/blob/main/terraform/iam.tf
```

As mentioned above, I’ve set up CLI access for local development. Since this is my first time accessing anything on Google Cloud, I started with:

```shell
gcloud auth login
```

The first thing I needed was the list of regions and zones (https://cloud.google.com/compute/docs/regions-zones/viewing-regions-zones).

The Compute Engine API wasn’t enabled for the default project:

```text
[smig@pve-framework terraform]$ gcloud compute zones list
API [compute.googleapis.com] not enabled on project [foo]. Would you like to enable and retry
(this will take a few minutes)? (y/N)? y
Enabling service [compute.googleapis.com] on project [foo]...
```

Well, I hit the first snag.

```text
Do you want to perform these actions?
OpenTofu will perform the actions described above.
Only 'yes' will be accepted to approve.
Enter a value: yes
google_service_account.sa: Creating...
google_service_account.sa: Still creating... [10s elapsed]
google_service_account.sa: Creation complete after 11s [id=projects/myproejct/etc7.iam.gserviceaccount.com]
data.google_iam_policy.admin: Reading...
data.google_iam_policy.admin: Read complete after 0s [id=323996171]
╷
│ Error: invalid value for bindings.0.members.0 (IAM members must have one of the values outlined here: https://cloud.google.com/billing/docs/reference/rest/v1/Policy#Binding)
│
│ with google_service_account_iam_policy.admin-account-iam,
│ on iam.tf line 20, in resource "google_service_account_iam_policy" "admin-account-iam":
│ 20: policy_data = data.google_iam_policy.admin.policy_data
│
╵
```

Ahh, this should be fixable, hopefully with a depends_on block.

Second guess: the policy isn’t being bound, but why not?

I did some Googling to work out which permissions are organization-level and which are project-level. Since I need project-level permissions, I had to exclude one additional permission. With some modifications to the module, things started working at the project level.

Shout-out to the author of this Terraform module for the assist.

Terraform strategies

Next, I set up a Terraform resource that creates an API key and writes it to a directory. The service account will use it to create and provision the Firestore database, the function, and the CI.

The idea is to keep one Terraform directory for the IAM resources and another for the resume infrastructure, so their lifecycles stay separate. The pipeline can manage both, but it only makes changes when certain flags are set.

I’m still leaning on the console, the Cloud API, and the Terraform docs. The plan is to create everything with Terraform locally, then destroy it and let the pipeline recreate it (with remote state for specific resources). Since I’m using OpenTofu, I’ll encrypt the state that holds the API key. (Post edit: the key was removed; GCP has application default credentials.)

I’ve noticed that Google Cloud enables the APIs for various resources when you navigate to them in the console.

Docs to get familiar with the Firestore database:

Now, it’s time to create the cloud function.

I need to get familiar with Cloud Functions and how to handle the various tasks my function will require.

Documentation used:

- https://cloud.google.com/functions/docs/writing/write-http-functions

- https://cloud.google.com/functions/docs/calling/http

directory structure docs

Functions Framework:

- https://github.com/GoogleCloudPlatform/functions-framework-python

- https://cloud.google.com/functions/docs/tutorials/terraform

Python time: create a virtual environment.

```shell
❯ python3 -m venv lambda/venv
❯ . lambda/venv/bin/activate
❯ which pip
/home/smig/repos/GitHub/cloud-resume-api-challenge/lambda/venv/bin/pip
❯ pip install --upgrade pip
```

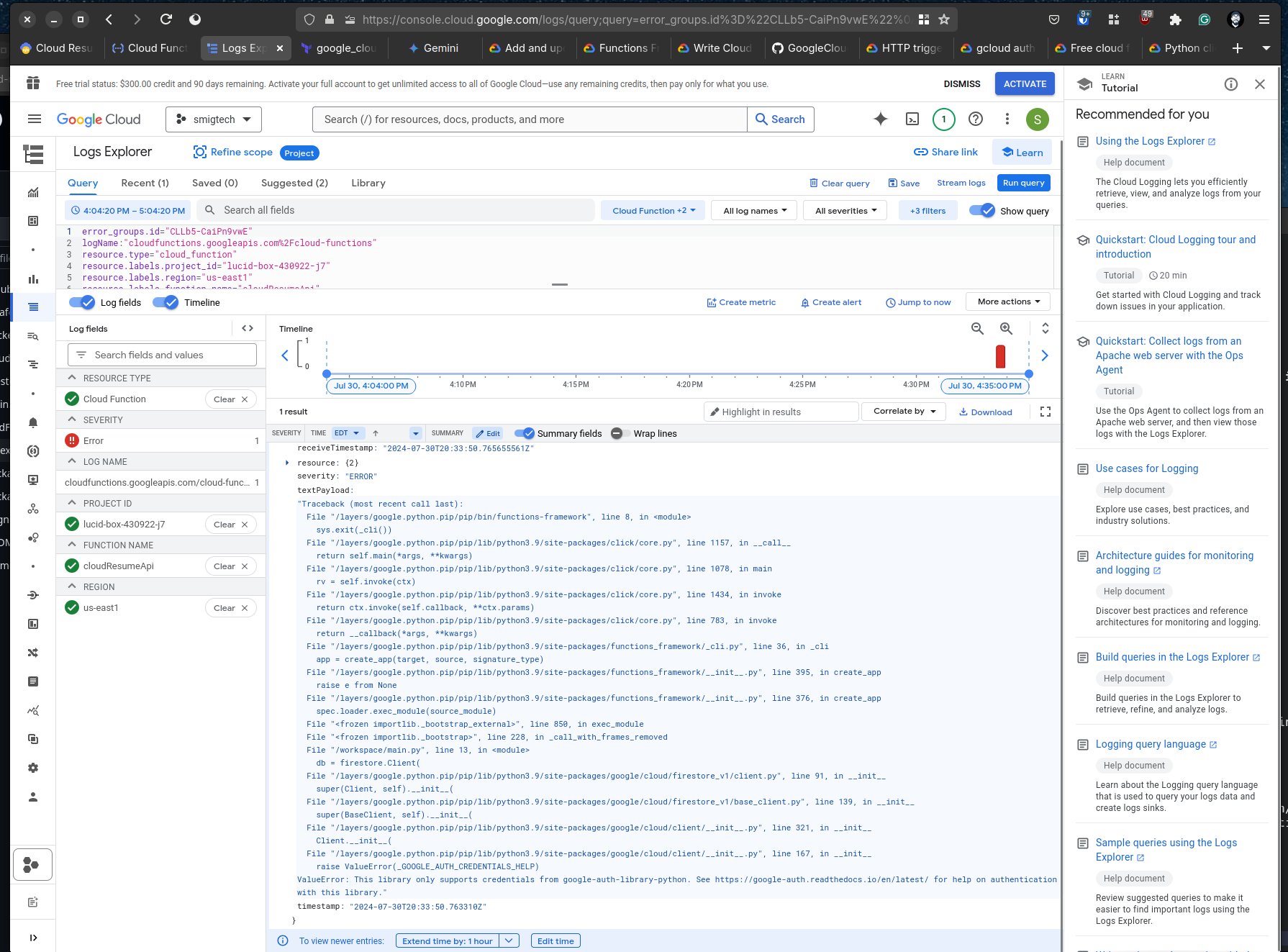

Of course, a few errors.

Troubleshooting permission issues:

```text
╷
│ Error: Error waiting for Creating CloudFunctions Function: Error code 3, message: Function failed on loading user code. This is likely due to a bug in the user code. Error message: Traceback (most recent call last):
│ File "/layers/google.python.pip/pip/bin/functions-framework", line 8, in <module>
│ sys.exit(_cli())
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/click/core.py", line 1157, in __call__
│ return self.main(*args, **kwargs)
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/click/core.py", line 1078, in main
│ rv = self.invoke(ctx)
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/click/core.py", line 1434, in invoke
│ return ctx.invoke(self.callback, **ctx.params)
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/click/core.py", line 783, in invoke
│ return __callback(*args, **kwargs)
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/functions_framework/_cli.py", line 36, in _cli
│ app = create_app(target, source, signature_type)
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/functions_framework/__init__.py", line 395, in create_app
│ raise e from None
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/functions_framework/__init__.py", line 376, in create_app
│ spec.loader.exec_module(source_module)
│ File "<frozen importlib._bootstrap_external>", line 850, in exec_module
│ File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed
│ File "/workspace/main.py", line 13, in <module>
│ db = firestore.Client(
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/google/cloud/firestore_v1/client.py", line 91, in __init__
│ super(Client, self).__init__(
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/google/cloud/firestore_v1/base_client.py", line 139, in __init__
│ super(BaseClient, self).__init__(
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/google/cloud/client/__init__.py", line 321, in __init__
│ Client.__init__(
│ File "/layers/google.python.pip/pip/lib/python3.9/site-packages/google/cloud/client/__init__.py", line 167, in __init__
│ raise ValueError(_GOOGLE_AUTH_CREDENTIALS_HELP)
│ ValueError: This library only supports credentials from google-auth-library-python. See https://google-auth.readthedocs.io/en/latest/ for help on authentication with this library.. Please visit https://cloud.google.com/functions/docs/troubleshooting for in-depth troubleshooting documentation.
│
│ with google_cloudfunctions_function.function,
│ on lambda.tf line 21, in resource "google_cloudfunctions_function" "function":
│ 21: resource "google_cloudfunctions_function" "function" {
```

I got authentication working by passing the credentials to the function as an environment variable. That isn’t the most secure option, so the next step was to attach the service account directly to the function.

I’ve updated the Terraform manifest to attach the service account to the function. This lets the function authenticate to the database without anything extra. Perfect.
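
For context, here is a minimal sketch of what the client setup in main.py can look like once the service account is attached; it is not necessarily what’s in the repo, and the helper name get_db is hypothetical. It relies on the google-cloud-firestore client falling back to Application Default Credentials when no credentials argument is passed; inside Cloud Functions, those resolve to the function’s attached service account. The ValueError in the traceback above is what the client raises when the credentials argument is something other than a google-auth credentials object.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def get_db(project=None):
    """Return a Firestore client, created on first use and reused afterwards.

    Hypothetical helper. No ``credentials`` argument is passed, so the client
    uses Application Default Credentials; inside Cloud Functions those come
    from the service account attached to the function.
    """
    # Imported lazily so the client is only built when first needed
    # (and so this module can be imported without the library installed).
    from google.cloud import firestore

    return firestore.Client(project=project)
```

Creating the client once and caching it means warm invocations reuse the same connection instead of building a new client per request.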

NIIIICE, a happy moment. Another nugget for GCP functions: you can develop the function locally without building and shipping it every time by running:

```shell
functions-framework --target <the method name>
```

Flask, oh how I’ve missed you

It’s been a while since I’ve used Flask, so I used the docs to refresh my memory for the Python function:

After making some code changes, I noticed my cloud function wasn’t updating.

A Google search led me to this issue.

As I continued debugging, the function started returning a 403: "Missing or insufficient permissions." So I needed to figure out why the service account wasn’t authorized to access the database.

I figured out the permissions issue. The problem was my speed reading and my lack of context on Google Cloud IAM.

Check out the snippet from the docs that made things click:

> When managing IAM roles, you can treat a service account either as a resource or as an identity. This resource is to add iam policy bindings to a service account resource, such as allowing the members to run operations or modify the service account. To configure permissions for a service account on other GCP resources, use the google_project_iam set of resources.

The part I needed is the last sentence: to give a service account permissions on other GCP resources, use the google_project_iam set of resources. Once I configured it that way, things worked as expected.
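
To make the resource-versus-identity distinction concrete, here is a small, simplified sketch over IAM policies in their JSON form (a list of bindings, each with a role and members). The service account email is a made-up placeholder, and roles/datastore.user (a predefined Firestore read/write role) stands in for my custom role. Real IAM also evaluates inherited policies and individual permissions; this only models where the binding lives.

```python
# Placeholder member and role, for illustration only.
SA = "serviceAccount:api-sa@example-project.iam.gserviceaccount.com"
FIRESTORE_ROLE = "roles/datastore.user"  # predefined role with Firestore read/write access


def roles_for(policy, member):
    """Return the set of roles a policy's bindings grant to `member`."""
    return {
        binding["role"]
        for binding in policy.get("bindings", [])
        if member in binding.get("members", [])
    }


def can_use_firestore(project_policy, member):
    """Simplified check: only the *project* policy grants access to Firestore.

    Bindings on the service account's own policy (the account as a resource)
    decide who may act as or manage that account; they are not consulted here.
    """
    return FIRESTORE_ROLE in roles_for(project_policy, member)


# Misconfigured: the role is bound on the service account resource only.
sa_resource_policy = {"bindings": [{"role": FIRESTORE_ROLE, "members": [SA]}]}
project_policy_before = {"bindings": []}

# Fixed: the role is bound on the project, which google_project_iam_* resources manage.
project_policy_after = {"bindings": [{"role": FIRESTORE_ROLE, "members": [SA]}]}
```

With these values, can_use_firestore(project_policy_before, SA) is False even though roles_for(sa_resource_policy, SA) contains the role, which is consistent with the 403. can_use_firestore(project_policy_after, SA) is True.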

Let’s get some user stories going

Time check-in: it’s Wednesday, the last day of the challenge. As an early riser, I usually do my professional development in the morning, around 4:30 a.m.

Next, I’ll use ChatGPT to generate some sample data to enter into the DB based on the JSON schema.
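
Before loading generated sample data, it helps to check each record’s shape. The sketch below assumes a JSON Resume-style layout with top-level basics, work, education, and skills keys; those required keys and types are my assumption, so swap in whatever the real schema demands. It uses only the standard library rather than a full JSON Schema validator.

```python
import json

# Assumed shape: required top-level keys and their expected JSON types.
REQUIRED_FIELDS = {
    "basics": dict,
    "work": list,
    "education": list,
    "skills": list,
}


def validate_resume(doc):
    """Return a list of problems with a resume document (empty list means OK)."""
    if not isinstance(doc, dict):
        return ["resume must be a JSON object"]
    problems = []
    for key, expected in REQUIRED_FIELDS.items():
        if key not in doc:
            problems.append(f"missing '{key}'")
        elif not isinstance(doc[key], expected):
            problems.append(f"'{key}' should be a {expected.__name__}")
    basics = doc.get("basics")
    if isinstance(basics, dict) and not basics.get("name"):
        problems.append("'basics.name' is required")
    return problems


def load_samples(raw_json):
    """Parse a JSON array of generated resumes, keeping only the valid ones."""
    records = json.loads(raw_json)
    return [record for record in records if not validate_resume(record)]
```

Filtering before the insert keeps a malformed generated record from landing in the database and breaking the GET response later.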

Now that the API is working, here are some example user stories:

- When a user hits the URL, the API returns, by default, every resume in the database as an array.

- When a user sends a POST request without the `resume-token` header set to a token, the API returns 401 Unauthorized.

- For a POST request, the user must set that header and send a JSON payload to upload. The API returns an error if `Content-Type` is not set to `application/json`.
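
The user stories above boil down to a small amount of request handling. Here is a framework-free sketch of that logic so the rules are easy to see and test. Several details are my assumptions, not the deployed code: the in-memory list standing in for Firestore, the single expected token, returning 401 for a wrong token as well as a missing one, 415 for a wrong Content-Type, and 201/400/405 for the remaining cases.

```python
import hmac
import json

JSON_HEADERS = {"Content-Type": "application/json"}


def handle(method, headers, body, store, expected_token):
    """Apply the user-story rules; return (status, response_headers, body_str).

    `store` is a plain list standing in for the Firestore collection and
    `expected_token` is a hypothetical shared secret.
    """
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {k.lower(): v for k, v in headers.items()}

    if method == "GET":
        # Default behavior: every resume, returned as a JSON array.
        return 200, JSON_HEADERS, json.dumps(store)

    if method == "POST":
        token = headers.get("resume-token", "")
        # Compare as bytes in constant time so timing doesn't leak the token.
        if not token or not hmac.compare_digest(token.encode(), expected_token.encode()):
            return 401, JSON_HEADERS, json.dumps({"error": "unauthorized"})
        # Ignore parameters such as "; charset=utf-8" when checking the type.
        content_type = headers.get("content-type", "").split(";")[0].strip()
        if content_type != "application/json":
            return 415, JSON_HEADERS, json.dumps({"error": "Content-Type must be application/json"})
        try:
            resume = json.loads(body)
        except ValueError:
            return 400, JSON_HEADERS, json.dumps({"error": "invalid JSON"})
        store.append(resume)
        return 201, JSON_HEADERS, json.dumps({"status": "created"})

    return 405, {"Allow": "GET, POST", **JSON_HEADERS}, json.dumps({"error": "method not allowed"})
```

In the deployed function, the inputs would come from the Flask request that functions-framework passes in, e.g. `status, hdrs, body = handle(request.method, dict(request.headers), request.get_data(), store, token)`, and the entry point can `return body, status, hdrs`, a tuple Flask accepts as a response.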

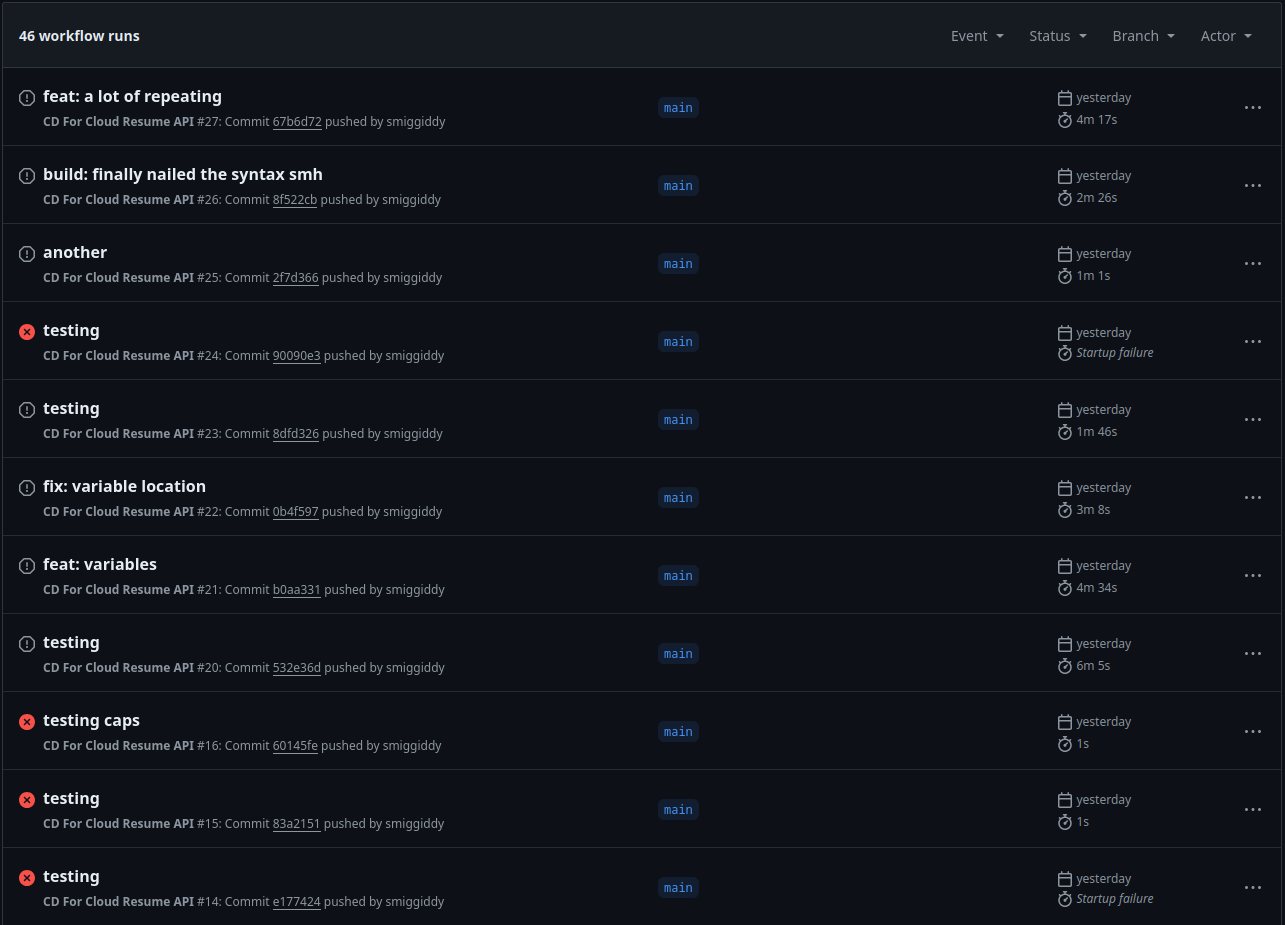

Oh boy, GitHub actions

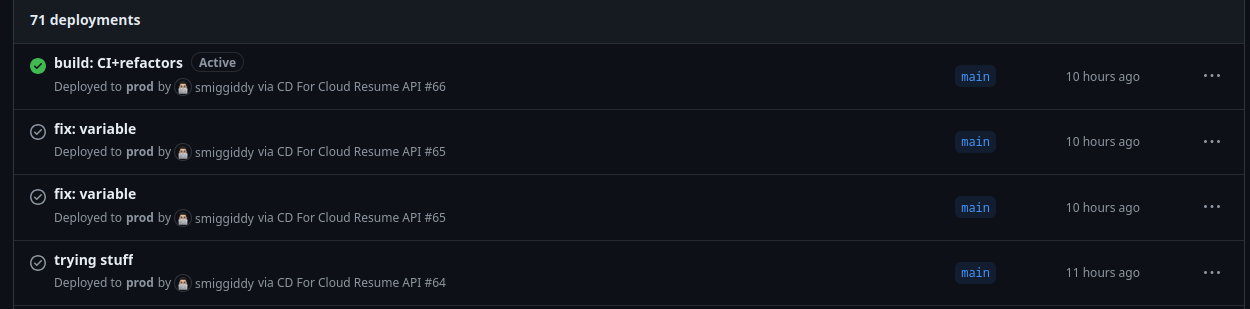

As the last piece of this project, I need to get the GitHub Actions pipeline working and configure OpenTofu to use remote state.

As you may have come to expect, I’ll be using (you guessed it) documentation.

Using the official OpenTofu GitHub Setup Action:

- https://github.com/marketplace/actions/opentofu-setup-tofu

- https://developer.hashicorp.com/terraform/tutorials/automation/github-actions

Another snag.

I can’t for the life of me figure out how to reference environment variables and secrets in GitHub Actions.

This is frustrating, lol. Using environment variables should be simple!

I’m using what I know should be valid YAML syntax for referencing the variables:

```yaml
fooVar: ${{ env.mikes_variable_that_wont_load }}
barSecret: ${{ secrets.that_no_one_can_use }}
```

Not understanding the full context of GitHub Actions in this short time may be my undoing. I need to start my workday, so I’ll pick this back up at lunch or after work.

Welp

Man, this took a while to get the pipeline working. It’s about 11pm EDT.

I’m feeling pressure to submit before midnight, and I honestly might make it, but I’m going to hold off. I got so caught up in troubleshooting and learning that I forgot to live-document the steps I took to solve it. Sorry! Let’s just say millions of tabs were open, lol.

The TL;DR: when using workflows, variables and secrets need to be explicitly passed to the workflow. 😄
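
One habit that would have saved me some tabs: fail fast when an expected value never made it into the job. In GitHub Actions, an expression that references a secret the job can’t see evaluates to an empty string instead of erroring, so a tiny check at the start of the pipeline makes the problem obvious. This is a hypothetical guard, not part of my pipeline; the function names are illustrative.

```python
import os
import sys


def missing_vars(names, environ=None):
    """Return the names that are unset or empty in `environ` (default: os.environ).

    Empty counts as missing: in GitHub Actions, an expression that references
    a secret the job can't see evaluates to an empty string.
    """
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


def check(names, environ=None):
    """Print which required names are missing; return a process exit code."""
    missing = missing_vars(names, environ)
    if missing:
        # Report only the names, never the values.
        print("Missing or empty: " + ", ".join(missing), file=sys.stderr)
        return 1
    return 0
```

Saved as a module (say, a hypothetical check_env.py), a workflow step could run `python -c "import sys, check_env; sys.exit(check_env.check(['TF_VAR_service_account_id']))"` so the job fails before OpenTofu ever runs with a blank variable.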

At least learning was accomplished

Tech is something you should take your time with, and this experience was a great reminder of that. I’m happy with what I was able to accomplish in two days on a new cloud service provider.

The API can be reached here: https://us-east1-lucid-box-430922-j7.cloudfunctions.net/cloudResumeApi
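
If you want to poke at the API from code, here is a minimal standard-library client sketch. The helpers only build the requests; sending is a single urlopen call. The resume-token header and JSON Content-Type follow the user stories earlier in this post; the token value and payload you pass in are placeholders. The same helpers work against a local functions-framework server, which listens on port 8080 by default.

```python
import json
import urllib.request

API_URL = "https://us-east1-lucid-box-430922-j7.cloudfunctions.net/cloudResumeApi"
# For local testing against functions-framework, use "http://localhost:8080/" instead.


def list_resumes_request(url=API_URL):
    """Build a GET request that asks for every resume."""
    return urllib.request.Request(url, method="GET")


def upload_resume_request(resume, token, url=API_URL):
    """Build a POST request carrying the token header and a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(resume).encode("utf-8"),
        headers={"resume-token": token, "Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send a request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Note that urlopen raises urllib.error.HTTPError for 4xx and 5xx responses, so a missing or wrong token surfaces as an exception rather than a return value.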

A few takeaways:

- How to build something in GCP.

- More about Google’s IAM policies. (IAM and the GitHub Actions syntax were my biggest hurdles.)

- GitHub Actions.

- Workload Identity Federation is really cool.

(Opinion: I recommend GitLab for CI, likely because of my familiarity with the tool. However, I’m open to learning more about GitHub Actions.)

Unfortunately, there just wasn’t enough time to submit this for the challenge. For a submission, I would’ve loved to have:

- the blog post

- documentation

- pipeline validation

- testing

- a few more API features

Still, this was a valuable experience. I learned more about authentication, GCP (my first time using it), and GitHub Actions + OpenTofu. Until now, I’d been using Terraform with AWS and Oracle Cloud exclusively.

This challenge gave me a chance to explore a new toolset.

I’m also coming away wanting to use serverless functions for more things. I can build plenty of useful one-off tools and scripts as HTTP functions. Also, GCP is generous, allowing 2 million invocations per month for free. :-)

Conclusion

In closing, I plan to keep building out this project. Since I’m working on my front-end JS/React/CSS skills, this API makes a nice backend for those projects; I’ve created a CV generator in React.

This project is perfect for creating a resume database for tech job seekers. As I work on the React UI, it’s nice to have a backend database of candidates that could be readily available to recruiters or attendees of networking events.

Anyway, if you’ve made it this far, thanks for reading, and happy hacking. I hope you’ve enjoyed a piece of my personal development lab time.

Be sure to check out the API challenge for your own learning, and let me know if you do.

https://cloudresumeapi.dev/